Image copyright – Journal of Neuroscience

The Successor Representation: Its Computational Logic and Neural Substrates

Published in The Journal of Neuroscience (2018)

Author

Samuel J. Gershman

Paper presented by Dr. Thomas Stalnaker and selected by the NIDA TDI Paper of the Month Committee.

Publication Brief Description

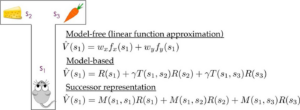

Traditional reinforcement learning algorithms can be broadly classed as either model-based, which maximize flexibility at the expense of efficiency, or model-free, which are computationally efficient but inflexible. Gershman revives and builds on an algorithm first proposed by Peter Dayan in 1993, the successor representation, which retains much of the efficiency of a model-free algorithm while remaining flexible when reward values or goal states change. For each state in the environment, the successor representation stores a prediction of how often each other state will be visited in the future, with visits discounted by how far ahead they occur. Combining this predictive map of state relationships with the current reward value of each state yields decision values, so a change in reward can be reflected in those values without relearning the map. Recent research supports the idea that humans and other animals use a successor representation for learning and decision making under some conditions, and that it could in principle be learned through prediction-error mechanisms analogous to dopaminergic error signals. For these reasons, algorithms using the successor representation are strong candidates for modeling and testing addiction-related behavior.
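To make the idea concrete, here is a minimal sketch, assuming a small, fully enumerated state space with a known transition matrix `T` (rows sum to 1) and a discount factor `gamma < 1`. It uses the standard definition of the successor representation, M(s, s') = expected discounted number of future visits to s' starting from s, which equals (I − γT)⁻¹. The function names `successor_matrix`, `values`, and `td_learn_sr` are hypothetical and chosen for illustration; the TD-style update shown is a generic textbook form, not necessarily the exact formulation used in the paper.

```python
import random


def successor_matrix(T, gamma, iters=1000):
    """SR M = (I - gamma*T)^-1, computed by the fixed-point iteration
    M <- I + gamma * T @ M (converges because gamma < 1 and T is row-stochastic)."""
    n = len(T)
    M = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(iters):
        M = [[(1.0 if i == j else 0.0)
              + gamma * sum(T[i][k] * M[k][j] for k in range(n))
              for j in range(n)]
             for i in range(n)]
    return M


def values(M, R):
    """Decision values V(s) = sum over s' of M(s, s') * R(s')."""
    return [sum(m * r for m, r in zip(row, R)) for row in M]


def td_learn_sr(T, gamma, alpha=0.05, steps=20000, seed=0):
    """Learn M from sampled transitions using an SR prediction error
    delta_j = 1[s == j] + gamma * M[s'][j] - M[s][j] for every state j."""
    rng = random.Random(seed)
    n = len(T)
    M = [[0.0] * n for _ in range(n)]
    s = 0
    for _ in range(steps):
        s_next = rng.choices(range(n), weights=T[s])[0]
        for j in range(n):
            delta = (1.0 if s == j else 0.0) + gamma * M[s_next][j] - M[s][j]
            M[s][j] += alpha * delta
        s = s_next
    return M


if __name__ == "__main__":
    # Hypothetical 3-state ring: 0 -> 1 -> 2 -> 0, deterministic so results are easy to check.
    T = [[0, 1, 0], [0, 0, 1], [1, 0, 0]]
    M = successor_matrix(T, gamma=0.5)
    print([round(v, 3) for v in values(M, [0, 0, 1])])  # reward at state 2 → [0.286, 0.571, 1.143]
    # Goal moves to state 0: reuse the same M, only the reward vector changes.
    print([round(v, 3) for v in values(M, [1, 0, 0])])  # → [1.143, 0.286, 0.571]
```

The second call illustrates the flexibility described above: when the goal moves, decision values are recomputed from the unchanged predictive map rather than relearned from experience. `td_learn_sr` shows that the same map can be acquired incrementally from experienced transitions with an error signal, which is the sense in which learning could use mechanisms analogous to dopaminergic prediction errors.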

Citation: Gershman SJ. The Successor Representation: Its Computational Logic and Neural Substrates. J Neurosci, vol. 38, no. 33, pp. 7193–7200, 2018. ISSN: 1529-2401.