Featured Paper of the Month – September 2016

Sadacca, Brian F; Jones, Joshua L; Schoenbaum, Geoffrey

Midbrain dopamine neurons compute inferred and cached value prediction errors in a common framework. Journal Article

In: eLife, vol. 5, 2016, ISSN: 2050-084X.

@article{Sadacca2016,
  title = {Midbrain dopamine neurons compute inferred and cached value prediction errors in a common framework.},
  author = {Brian F Sadacca and Joshua L Jones and Geoffrey Schoenbaum},
  url = {https://www.ncbi.nlm.nih.gov/pubmed/26949249},
  doi = {10.7554/eLife.13665},
  issn = {2050-084X},
  year = {2016},
  date = {2016-03-07},
  journal = {eLife},
  volume = {5},
  address = {Intramural Research Program of the National Institute on Drug Abuse, National Institutes of Health, Bethesda, United States.},
  abstract = {Midbrain dopamine neurons have been proposed to signal reward prediction errors as defined in temporal difference (TD) learning algorithms. While these models have been extremely powerful in interpreting dopamine activity, they typically do not use value derived through inference in computing errors. This is important because much real world behavior - and thus many opportunities for error-driven learning - is based on such predictions. Here, we show that error-signaling rat dopamine neurons respond to the inferred, model-based value of cues that have not been paired with reward and do so in the same framework as they track the putative cached value of cues previously paired with reward. This suggests that dopamine neurons access a wider variety of information than contemplated by standard TD models and that, while their firing conforms to predictions of TD models in some cases, they may not be restricted to signaling errors from TD predictions.},
  keywords = {},
  pubstate = {published},
  tppubtype = {article}
}

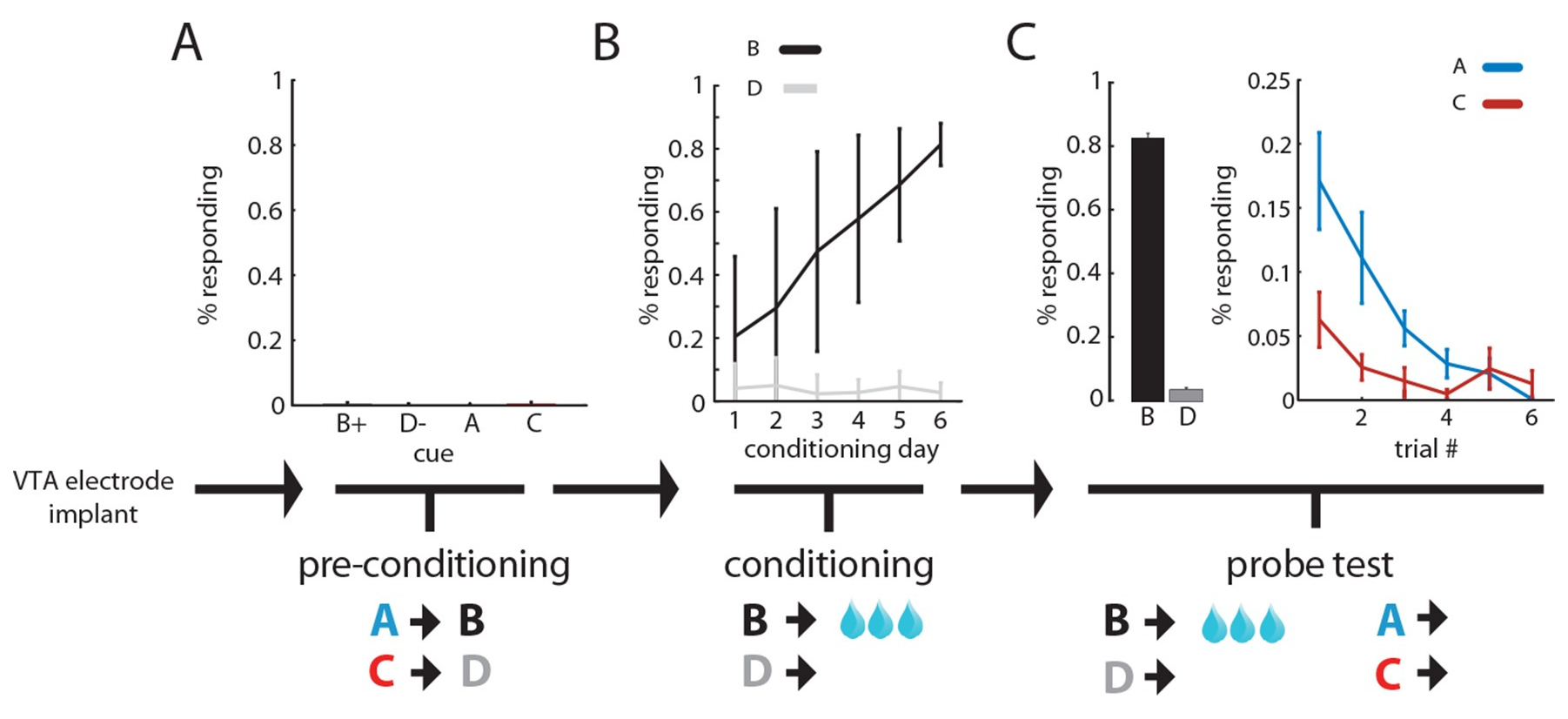

Midbrain dopamine neurons have been proposed to signal reward prediction errors as defined in temporal difference (TD) learning algorithms. While these models have been extremely powerful in interpreting dopamine activity, they typically do not use value derived through inference in computing errors. This is important because much real world behavior - and thus many opportunities for error-driven learning - is based on such predictions. Here, we show that error-signaling rat dopamine neurons respond to the inferred, model-based value of cues that have not been paired with reward and do so in the same framework as they track the putative cached value of cues previously paired with reward. This suggests that dopamine neurons access a wider variety of information than contemplated by standard TD models and that, while their firing conforms to predictions of TD models in some cases, they may not be restricted to signaling errors from TD predictions.
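As a rough illustration of the distinction the abstract draws between cached and inferred value, the toy sketch below runs a tabular TD(0) update over two hypothetical training phases. The cue names `A` and `B`, the two-phase schedule, the learning rate, the discount factor, and the helper functions are all assumptions made for this example; they are not taken from the paper's methods. The point is only that a cue never paired with reward keeps a cached TD value of zero, while a one-step lookup through a learned cue-to-cue transition assigns it an inferred value.

```python
# Toy sketch only: cue names, schedule, and parameters are assumptions.
ALPHA = 0.1  # learning rate (assumed)
GAMMA = 1.0  # discount factor (assumed)


def td_error(reward, v_next, v_current, gamma=GAMMA):
    """TD prediction error: delta = r + gamma * V(s') - V(s)."""
    return reward + gamma * v_next - v_current


def train_cached(values, episodes, alpha=ALPHA):
    """Tabular TD(0) over episodes of (state, reward, next_state) steps."""
    for episode in episodes:
        for state, reward, next_state in episode:
            v_next = values.get(next_state, 0.0) if next_state is not None else 0.0
            v_current = values.get(state, 0.0)
            delta = td_error(reward, v_next, v_current)
            values[state] = v_current + alpha * delta
    return values


def inferred_value(cue, cached_values, transitions, gamma=GAMMA):
    """One-step model-based lookup: value of the cue's learned successor."""
    successor = transitions.get(cue)
    if successor is None:
        return cached_values.get(cue, 0.0)
    return gamma * cached_values.get(successor, 0.0)


# Hypothetical phase 1: cue A is followed by cue B, with no reward.
phase1 = [[("A", 0.0, "B"), ("B", 0.0, None)]] * 50
# Hypothetical phase 2: cue B alone is followed by reward.
phase2 = [[("B", 1.0, None)]] * 50

cached = train_cached({}, phase1 + phase2)
transitions = {"A": "B"}  # cue-to-cue association assumed learned in phase 1

print("cached value of A:  ", cached["A"])
print("cached value of B:  ", round(cached["B"], 3))
print("inferred value of A:", round(inferred_value("A", cached, transitions), 3))
```

In this sketch, a prediction error computed at the onset of `A` from cached values alone would be zero, whereas one computed from the inferred value would be positive. That is the kind of contrast the abstract describes, though the actual experimental design and analysis are those reported in the paper itself.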